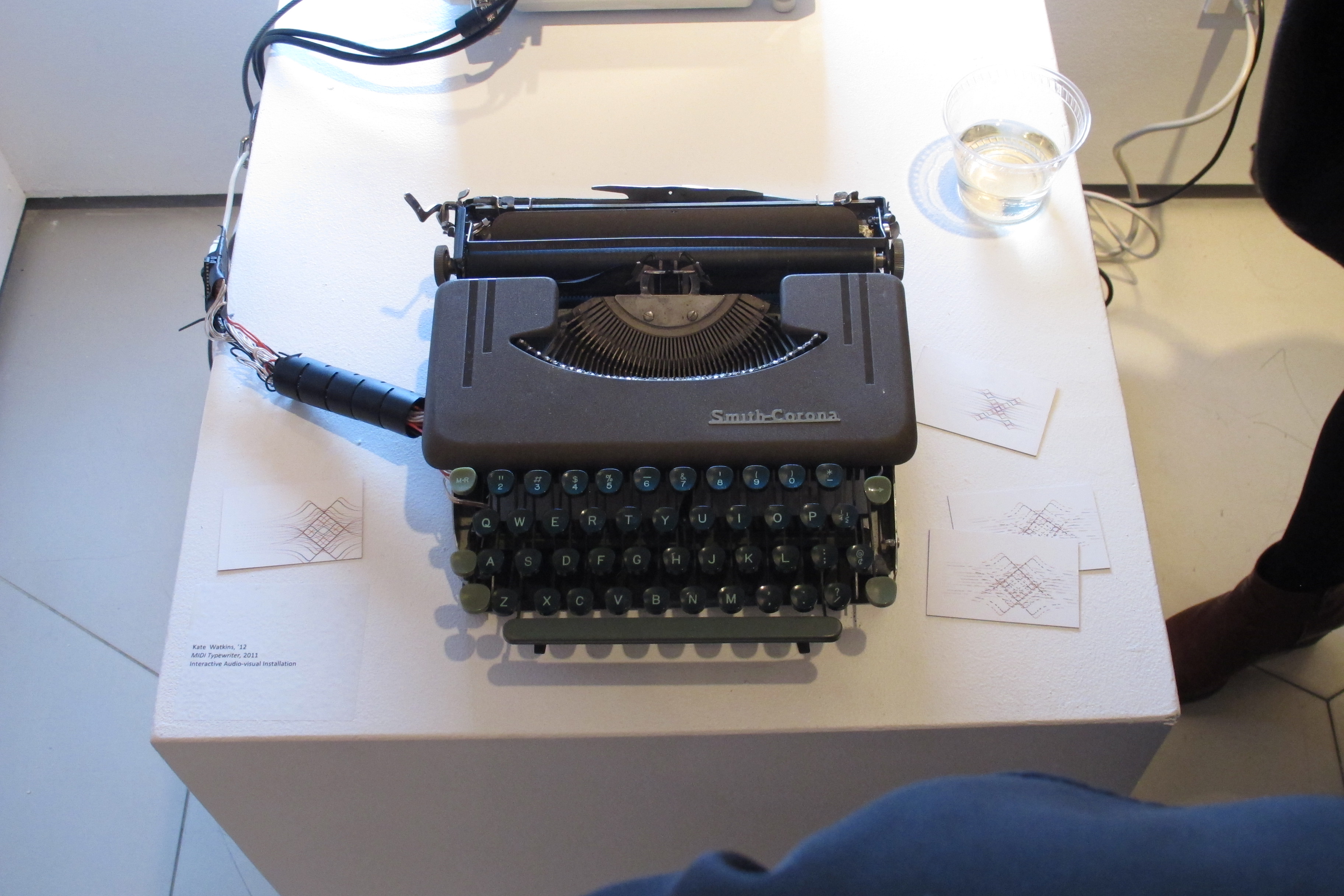

The musical instrument that I am proposing offers a new form of hybrid performative experience. The project revolves around patterns: patterns of language, patterns of music, and the visual representation of the two. By analyzing the frequency of the sound of each letter on the typewriter, then grouping the letters into sectors that are converted to a range of MIDI notes, my goal is to create a performative experience in which individuals discover the connection between language and music as they play and interact with the MIDI typewriter interface. The visual output will be mapped onto a grid similar to the piano roll notation of a digital MIDI sequencer, where frequency appears along the x-axis and duration moves across the y-axis. I want the graphical score to connect the meaning of the language being typed with the MIDI notes being output.

Parsons The New School for Design Alumni Exhibition: this musical instrument will be installed at Aronson Gallery (66 5th Ave at W 13th St.), October 13th through the 19th.

Design Questions

How do I provide a useful tool that not only lets the user explore making music, but also adds another layer where he/she can discover how music and language intersect through this new hybrid interface? The question revolves around how music is broken up and how language is broken up: how can I make them intersect in an effective and interesting way? How can I use the different letters on the typewriter to represent different notes?

Elements + Process

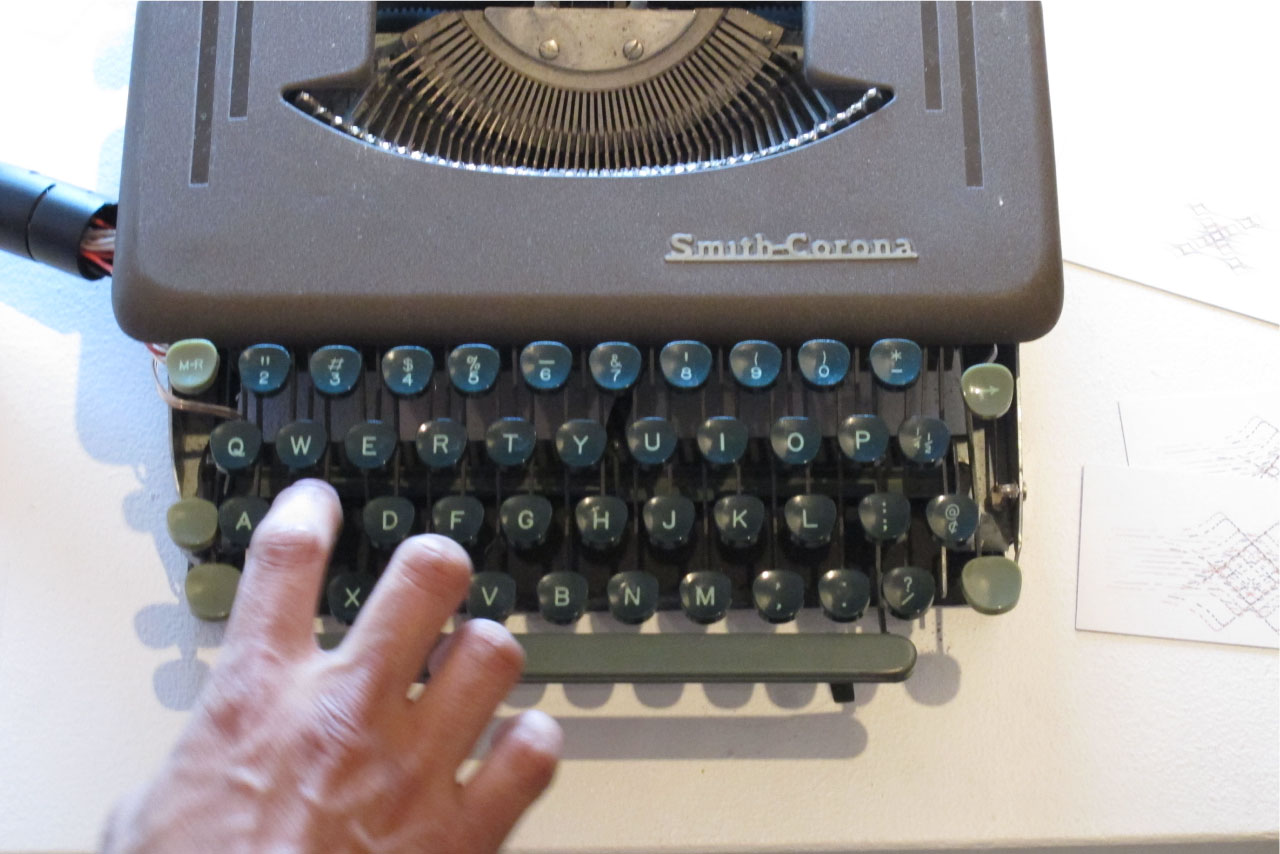

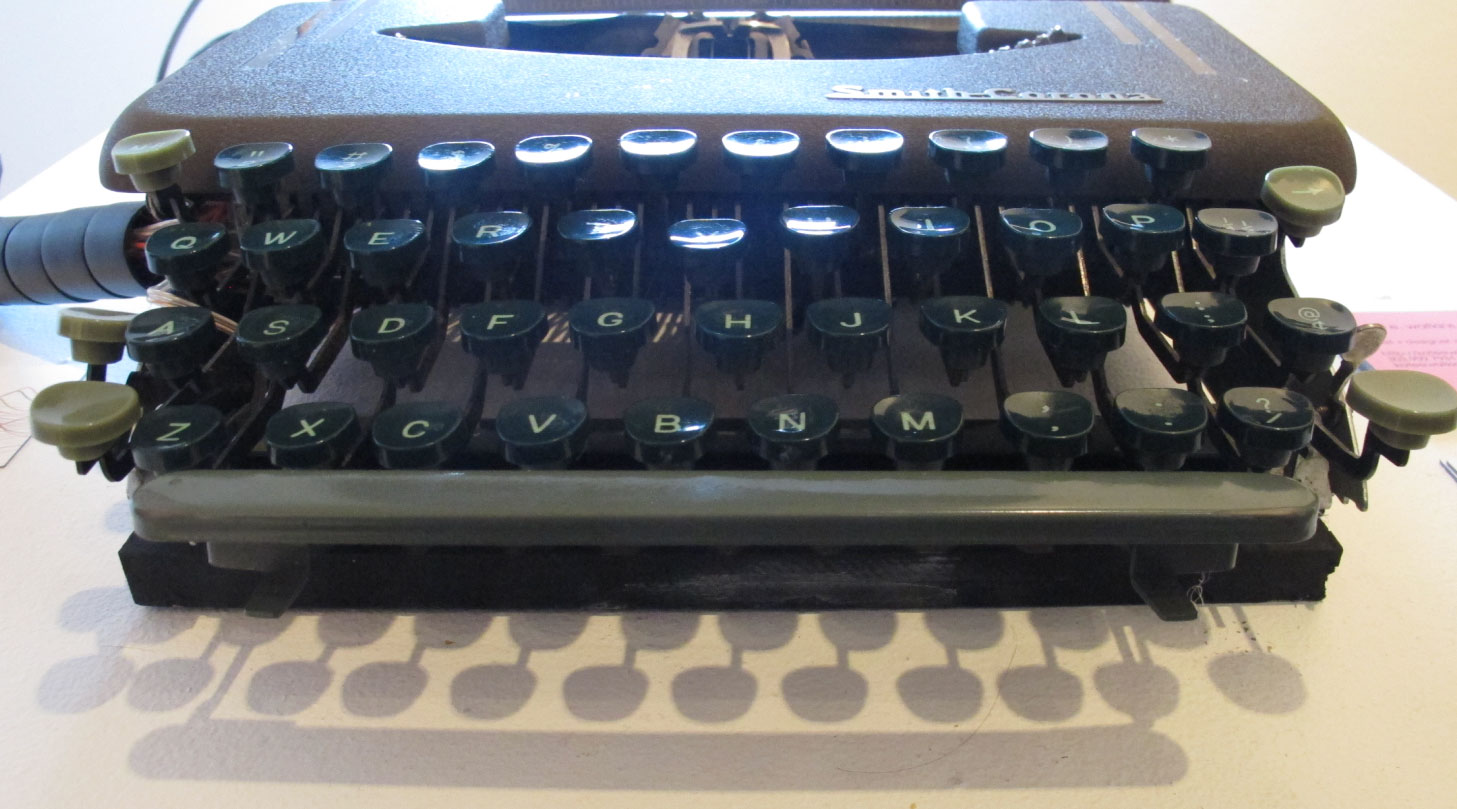

I want to fully explain the different elements that this project consists of and the various ideations that have arisen through my process thus far. The typewriter obviously represents language, specifically the spelling out of a word, where each letter represents a particular sound and cultural meaning. It also produces a unique mechanical sound as individual keys are pressed. By utilizing the musicality and tangibility of the typewriter keys, one of my intentions is to create a nice juxtaposition of the mechanical sound with the digital output.

The next element is the digital sound that will be output through the MIDI protocol. The remapping of the letter keys into MIDI notes is a vital element of the MIDI typewriter system. I recorded the phoneme of each letter, A through Z, and analyzed its frequency using a method called autocorrelation. The method compares a signal with versions of itself delayed by successive intervals. The point of comparing delayed versions of a signal is to find repeating patterns, or indicators of periodicity, in the signal. By doing this, I was able to investigate and discover remarkable patterns in the smallest segmental unit of sound, the letter phoneme, and use them to map frequency ranges to MIDI notes. It is important to note that each letter is converted to a single frequency or MIDI note, but its value or pitch is determined by its grouping in relation to the other letters. Also, the entire spectrum, from high to low frequency, is contained within one octave and divided among seven groups of letters.
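The autocorrelation idea described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the analysis code used for the project: the test tone, the sample rate, the search range (`min_hz`, `max_hz`), and the function names are all made up for the example. It multiplies a signal by delayed copies of itself and picks the delay (lag) where the match is strongest; that lag is taken as the period.

```python
import math

def autocorrelation(signal, lag):
    """Sum of products of the signal with a copy of itself delayed by `lag` samples."""
    return sum(signal[n] * signal[n + lag] for n in range(len(signal) - lag))

def estimate_pitch(signal, sample_rate, min_hz=80.0, max_hz=1000.0):
    """Return the frequency whose period (lag) gives the strongest self-similarity.

    min_hz and max_hz are assumed search bounds; they keep lag 0 (a trivial
    perfect match) out of the search.
    """
    min_lag = int(sample_rate / max_hz)
    max_lag = int(sample_rate / min_hz)
    best_lag = max(range(min_lag, max_lag + 1),
                   key=lambda lag: autocorrelation(signal, lag))
    return sample_rate / best_lag

# Stand-in for a recorded phoneme: a pure 220 Hz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 220.0 * n / SAMPLE_RATE) for n in range(2048)]
estimated_hz = estimate_pitch(tone, SAMPLE_RATE)
print(round(estimated_hz, 1))
```

Because the lag is a whole number of samples, the estimate lands near 220 Hz rather than exactly on it. Real phoneme recordings are noisier than a sine wave, so a practical version would likely window the signal and normalize the result.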

For example, the group with the highest range of frequencies contains the letters “F, J, S, T, X, and Z.” The second-highest group consists of “C, H, K, and Q.” The third group contains only the letter “V,” because its autocorrelation spectrum contrasted drastically with the rest of the letters. The vowels, “A, E, I, O, and U,” form the fourth, mid-range frequency group. The fifth group, with a lower range of frequencies, consists of “D, G, and Y,” followed by the sixth group: “B, P, R, and W.” The seventh group, containing the lowest range of frequencies, is “L, M, and N.”
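The grouping above translates naturally into a lookup table. In the sketch below, the seven groups come from the text, but the actual MIDI note numbers are an assumption: I have used the seven notes of a C major scale starting at middle C (note 60) simply because they fit within one octave. The names `ASSUMED_NOTES_LOW_TO_HIGH` and `notes_for` are hypothetical.

```python
# Letter groups from the text, ordered from highest to lowest frequency range.
GROUPS_HIGH_TO_LOW = ["FJSTXZ", "CHKQ", "V", "AEIOU", "DGY", "BPRW", "LMN"]

# Assumption: one note per group, all inside a single octave (C4 to B4).
ASSUMED_NOTES_LOW_TO_HIGH = [60, 62, 64, 65, 67, 69, 71]

# Pair the highest group with the highest note, and so on down.
LETTER_TO_NOTE = {}
for group, note in zip(GROUPS_HIGH_TO_LOW, reversed(ASSUMED_NOTES_LOW_TO_HIGH)):
    for letter in group:
        LETTER_TO_NOTE[letter] = note

def notes_for(text):
    """Translate typed text into a list of MIDI note numbers, skipping non-letters."""
    return [LETTER_TO_NOTE[ch] for ch in text.upper() if ch in LETTER_TO_NOTE]

print(notes_for("music"))
```

Every letter in the same group plays the same note, so the melody reflects the sound category of each letter rather than its position in the alphabet.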

There are many ways I can manipulate the range of MIDI notes by incorporating other keys on the typewriter. For this initial investigation, I decided to focus on the three SHIFT keys, DELETE, and SPACE. In this iteration of the MIDI typewriter, the three SHIFT keys sustain the note pressed by increasing the delay time and suspending the note until the key is released. SPACE pauses the sound, creating brief moments of silence, which relates to language and how sentences and phrases are broken up in dialog.
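One way to picture the SHIFT and SPACE behavior is as a small state machine over key events. This is a hypothetical sketch, not the project's code: the event tuples, the `"note_on"` / `"note_off"` / `"rest"` labels, and the choice to treat all three SHIFT keys as one are assumptions made for illustration.

```python
def process(events, letter_to_note):
    """Turn (action, key) events into note messages.

    While SHIFT is held, notes stay on until SHIFT is released.
    SPACE silences anything still sounding and inserts a rest.
    """
    shift_held = False
    sustained = []  # notes waiting for SHIFT to be released
    out = []
    for action, key in events:
        if key == "SHIFT":
            shift_held = (action == "down")
            if not shift_held:
                out.extend(("note_off", n) for n in sustained)
                sustained.clear()
        elif action == "down" and key == "SPACE":
            out.extend(("note_off", n) for n in sustained)
            sustained.clear()
            out.append(("rest", None))
        elif action == "down" and key in letter_to_note:
            note = letter_to_note[key]
            out.append(("note_on", note))
            if shift_held:
                sustained.append(note)
            else:
                out.append(("note_off", note))
    return out

# Example note numbers are placeholders for whatever mapping is in use.
demo_map = {"A": 65, "L": 60}
demo = process([("down", "SHIFT"), ("down", "A"), ("down", "L"), ("up", "SHIFT")], demo_map)
print(demo)
```

Here both notes start while SHIFT is down and are only released together when SHIFT comes up, which is the sustaining effect described above.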

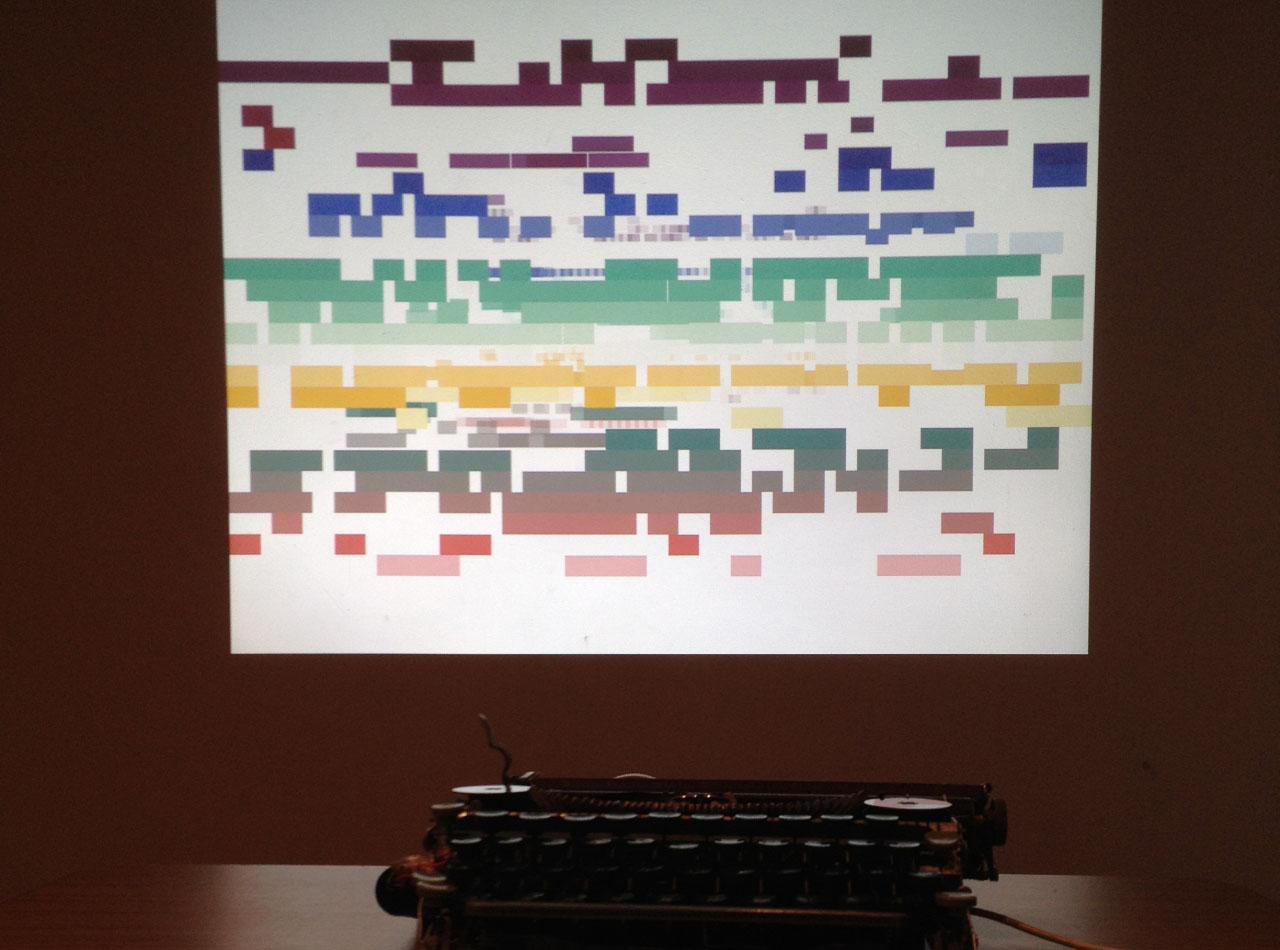

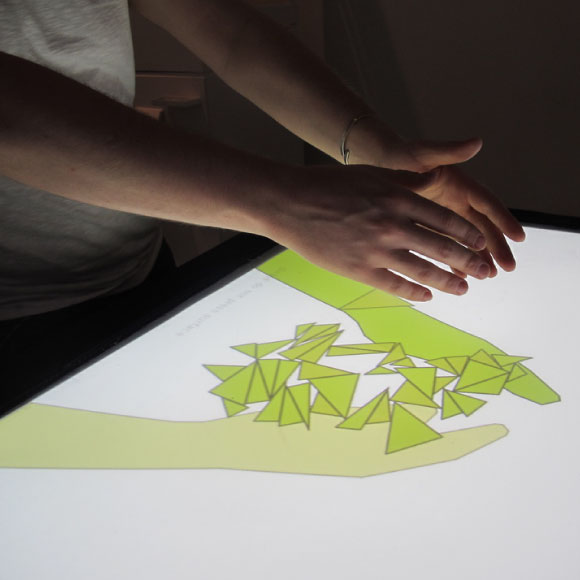

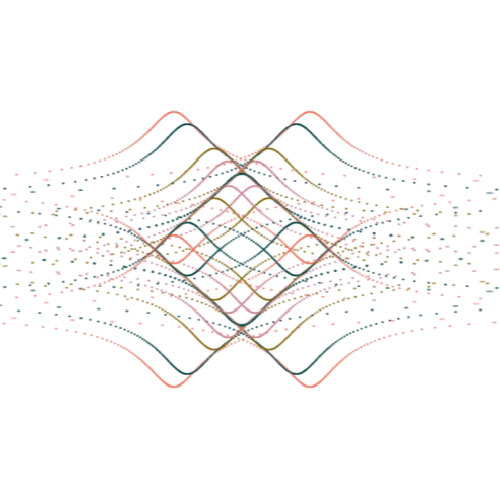

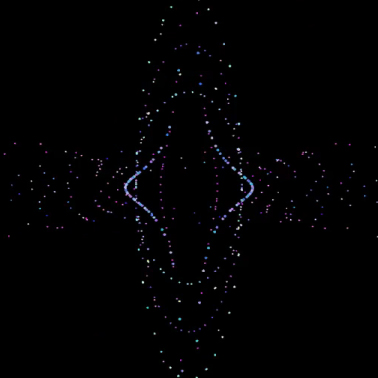

The visual output is another extremely important element. Although sound is crucial, the visual representation should accurately guide the user and help them understand the mapping of the instrument. By creating a graphical score, the visual output helps connect the meaning of the language being typed with the MIDI notes being output. One of my main investigations for this project is therefore to create a valid and meaningful connection between language and music, and to determine a beneficial way to represent the two as they intersect, both sonically and visually. The third functional key (not including the letters) is the DELETE key, which pushes the visual notation back along the z-axis. The z-depth of the score introduces an aspect of space: it makes the score a record, but an ephemeral one that recedes over time.

The visuals I decided upon were inspired by the piano roll notation of a computational MIDI sequencer. My idea was to manipulate this grid structure into an abstract but relatable representation for the user by mapping frequency (the letter sounds translated into MIDI notes) against duration.

Video Documentation

Implementation: Technology

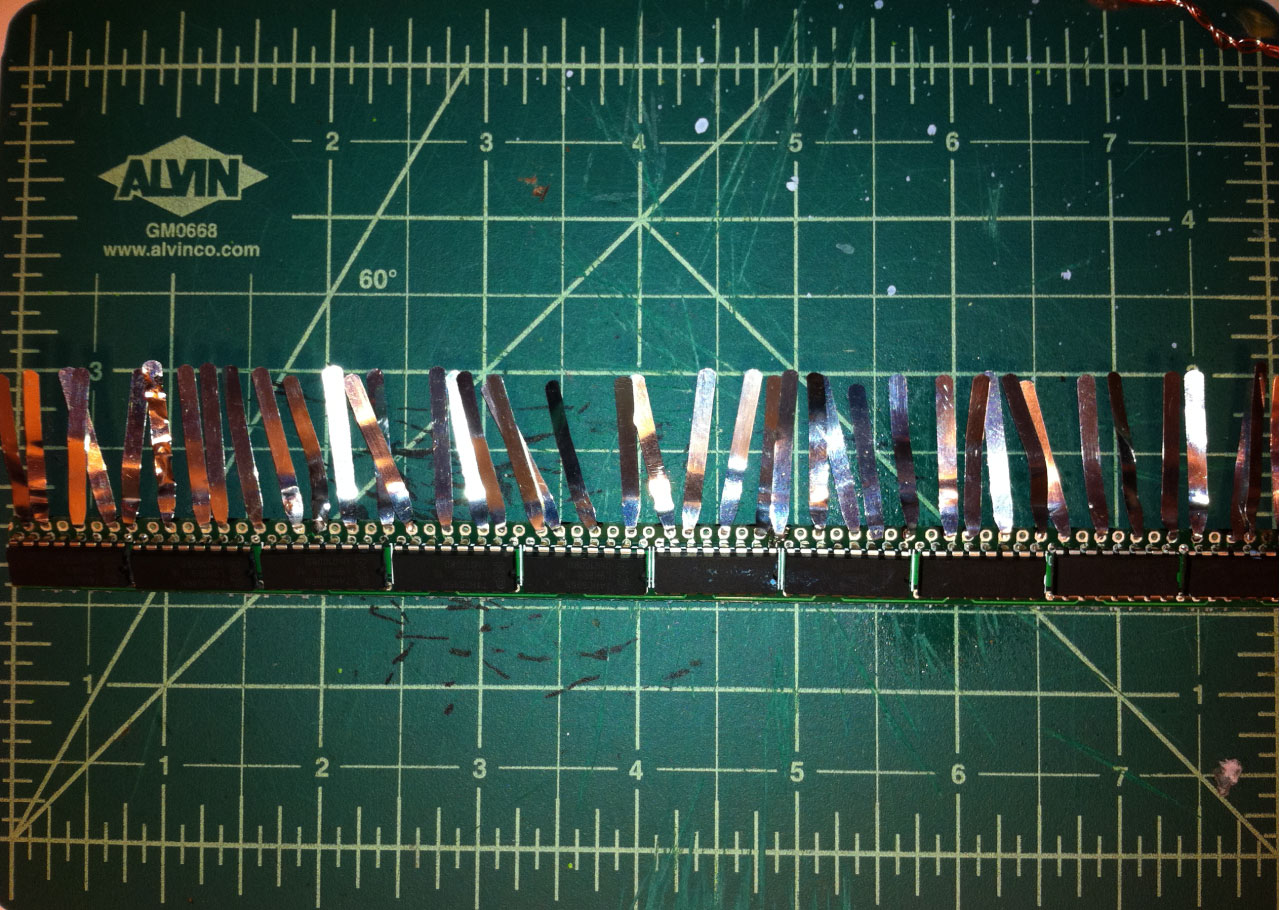

For the implementation of this project, I used a sensor board (found on Etsy), shift registers, and metal contact pins to send a signal to an ATmega microcontroller that communicates with openFrameworks when a key is pressed. The reference on Etsy was extremely useful because it simplified the process of creating switches for each key on the typewriter. The sensor board reduces clutter and makes the contacts for each of the letters more efficient and stable. The remaining keys work as switches, which communicate through Firmata to openFrameworks. The general mechanism is that when a key is pressed and hits the metal contact pin, the shift register sends the corresponding byte value to the ATmega, which in turn transmits this data to openFrameworks, where the visuals are triggered. Once openFrameworks recognizes that a key is pressed, it sends an OSC (Open Sound Control) message to the Max/MSP programming environment, which determines which MIDI note will be played.
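To make the last hop of this chain concrete, here is a minimal sketch of what an OSC message carrying a key value looks like on the wire. It is written in Python for illustration only (the project itself sends OSC from openFrameworks), and the address `/typewriter/key`, the port number, and the function names are assumptions. The packet layout follows the OSC 1.0 format: a null-terminated address padded to a multiple of four bytes, a type tag string, then a big-endian 32-bit integer.

```python
import struct

def osc_string(text):
    """Encode a string the OSC way: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding

def build_key_message(key_value, address="/typewriter/key"):
    """Build an OSC packet with one int32 argument (the key's byte value).

    The address is hypothetical; the receiving patch would need to listen for it.
    """
    return osc_string(address) + osc_string(",i") + struct.pack(">i", key_value)

packet = build_key_message(65)
print(len(packet), packet)

# Sending it over UDP would look roughly like this (port 7400 is an assumption):
#   import socket
#   sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
#   sock.sendto(packet, ("127.0.0.1", 7400))
```

On the Max/MSP side, an object listening on the same UDP port would receive the address and the integer and could then look up the MIDI note for that key.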

Code resources can be found here.
